Over the last year, the Docker project has seen wide adoption thanks to its simple, streamlined workflow, portability across systems, application isolation, lower resource footprint, container versioning, and the list goes on. A big part of this rise in adoption is also due to its team paying more attention to the community and community events. I myself got started after a local Docker community meetup, where a discussion with a mentor helped me understand the Docker ecosystem and workflow.

An aspect I really like about working with Docker is that it solves a real problem, found mainly in long-term projects: the time spent configuring a working environment for the project. One recurring issue I keep seeing is that each time we need a new environment, either because a new team member joins the project or because we need a new test environment, we lose a lot of time looking for a specific version of the software and then configuring it. This is an even bigger issue if the project relies on older versions of the software, for example PHP 5.3, as was the case for many projects using Magento 1.

Some colleagues might argue that tools like Ansible, Chef, or Puppet have undergone significant improvements, enabling the automation of machine provisioning. This is true for virtual machines as well as local development environments.

Automation tools seem to solve the problem of time wasted searching for specific versions of software. However, since all these tools depend on downloading the necessary software during machine provisioning, they cannot guarantee that precisely the same software versions get installed. Applications evolve during their lifetime, adding improvements or dropping less-used functionality. Software repositories can be moved or even shut down (see the case of the left-pad npm package), and thus we are back to square one. Of course, we can build our own software repositories, but that has its own set of issues and it’s more of a solution for big companies than for small teams.

Since Magento projects are usually ongoing or long-term projects, this issue needs to be taken into account early, before it escalates into a serious problem.

Docker addresses this issue quite well by using something it defines as “images”. Once an image is built, maybe at the start of a project, that same image can be distributed to the entire team or it can spawn new environments that can be easily removed when they are no longer needed.

With Docker images, a new developer joining the team can start working in minutes, regardless of the stage the project is in. They can pick up tasks or analyze the project using the very same environment as their colleagues.

With Docker images, we no longer need to maintain large software repositories. Images also make it simpler to share an environment with team members, even if they are spread across the globe.

Setting up Docker for Magento 2 applications

A workflow widely accepted in the Docker community is to run a single service [1] per container. By decoupling the three main services needed by a Magento application (the PHP engine, the web server and the database), we notice another big advantage offered by Docker: switching between different versions of these dependencies becomes easier and has no impact on the project. Thus, Docker enables us to test a new version of the database, a different PHP version, or even an alternative stack architecture without worrying about compromising our setup during a rollback.

The team at Evozon has prepared a quick Docker bootstrap project for Magento 2 following the one service per container rule. The structure is displayed below:

```
[Project Folder]
|
|   .project-env
|   docker-compose.yml
|   ...
+---.docker
|   |   .project-env.dist
|   |
|   +---database
|   |   |   Dockerfile
|   |   |
|   |   +---data
|   |   |       .gitkeep
|   |   |
|   |   \---setup
|   |           20170111114749-Magento-21-Default-Schema.sql
|   |
|   +---nginx
|   |   |   Dockerfile
|   |   |
|   |   +---conf
|   |   |       nginx.conf
|   |   |       upstream.conf
|   |   |
|   |   \---sites-enabled
|   |           project.conf
|   |
|   \---php
|       |   Dockerfile
|       |
|       +---conf
|       |       php.ini
|       |       project.pool.conf
|       |       xdebug.ini
|       |
|       \---logs
|               .gitkeep
|
+---app
|   ...
+---bin
|   ...
+---dev
|   ...
+---lib
|   ...
+---phpserver
|   ...
+---pub
|   ...
+---setup
|   ...
+---update
|   ...
+---var
|   ...
\---vendor
```

NOTE: the Docker bootstrap project for Magento 2 is available on the Evozon Github repository under the MIT licence.

As we can notice from the structure above, we will build three containers: one for Nginx, one for PHP and one for the database containing the MySQL server.
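
To make the one-service-per-container layout more concrete, here is a minimal sketch of what a matching `docker-compose.yml` could look like. The build paths follow the folder structure above; the service names, the published port and the mounted path `/var/www/html` are assumptions made for illustration, and the actual file in the bootstrap project may differ.

```yaml
# Hypothetical sketch, not the project's actual docker-compose.yml.
version: "2"

services:
  nginx:
    build: ./.docker/nginx        # Dockerfile from the structure above
    ports:
      - "80:80"                   # assumed port mapping
    volumes:
      - .:/var/www/html           # assumed document root inside the container
    depends_on:
      - php

  php:
    build: ./.docker/php
    volumes:
      - .:/var/www/html           # same code mounted for the PHP engine
    depends_on:
      - database

  database:
    build: ./.docker/database
```

Keeping each service in its own block is what makes it possible to swap, say, the PHP image without touching the web server or the database definitions.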

When building the services defined in the project’s `docker-compose.yml` file, Docker uses environment variables to configure the database service. To help us, a template file is provided under `.docker/.project-env.dist`.

Just copy the file to the project’s root folder, rename it by removing the `.dist` part from its name and edit the existing values or add new ones as needed.

NOTE: Be careful – this file contains environment variables specific to each individual system and should be kept out of the code repository.
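
One way Compose can hand these variables to the database container is the `env_file` option. The sketch below assumes the database image reads the variables of the official MySQL image (`MYSQL_ROOT_PASSWORD`, `MYSQL_DATABASE`, `MYSQL_USER`, `MYSQL_PASSWORD`); the variable names actually used by the bootstrap project are the ones listed in the template file and may be different.

```yaml
# Hypothetical sketch: passing the values from .project-env to the database service.
version: "2"

services:
  database:
    build: ./.docker/database
    env_file:
      - ./.project-env            # the copy of the .dist template, placed in the project root
    # Example content of .project-env (assumed names, as used by the official MySQL image):
    #   MYSQL_ROOT_PASSWORD=change-me
    #   MYSQL_DATABASE=magento
    #   MYSQL_USER=magento
    #   MYSQL_PASSWORD=change-me
```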

Now, by running a `docker-compose up -d` command, we will get a development environment ready for us to start working on our new Magento 2 module.

Configuring the Docker services for Magento 2 applications

As we have seen, the Docker bootstrap project for Magento 2 proposed by the Evozon team follows the good practices established by the Docker community and splits the stack into three different containers.

Every container has its own dedicated folder for configuration files, all pre-configured for use by the respective container.

This project also automatically configures a default database for a Magento 2.1 application, so we can skip all the installation steps.

If we need to make changes to the default database schema, we can add additional SQL scripts inside the `.docker/database/setup` folder. To use an existing database, we can change the folder where the container is storing the data to the folder where the existing MySQL instance stores its data.

The MySQL container is configured to automatically run the SQL scripts in alphabetical order, or to use the existing MySQL data files if they are provided.
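
For reference, the official MySQL image implements this behaviour through two paths: scripts found in `/docker-entrypoint-initdb.d` are executed in alphabetical order the first time the container starts with an empty data directory, and `/var/lib/mysql` holds the data files. Assuming the project’s database image is based on it, the two folders could be wired up as volumes like this (a sketch, not the project’s actual configuration):

```yaml
# Hypothetical sketch, assuming an image derived from the official MySQL image.
version: "2"

services:
  database:
    build: ./.docker/database
    volumes:
      # SQL scripts, run in alphabetical order on first initialization only
      - ./.docker/database/setup:/docker-entrypoint-initdb.d
      # Data files; point this at an existing MySQL data folder to reuse it
      - ./.docker/database/data:/var/lib/mysql
```

With the official image, the scripts only run against an empty data directory, so a script added later takes effect only after the data folder has been cleared.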

Configuring XDebug and PHPStorm

The bootstrap project for Magento 2 automatically installs XDebug when building the PHP image and container and it loads the XDebug configuration file from `.docker/php/conf/xdebug.ini`. If we need to change any XDebug configuration or add additional options, we can do this by simply editing this file and restarting the PHP container.

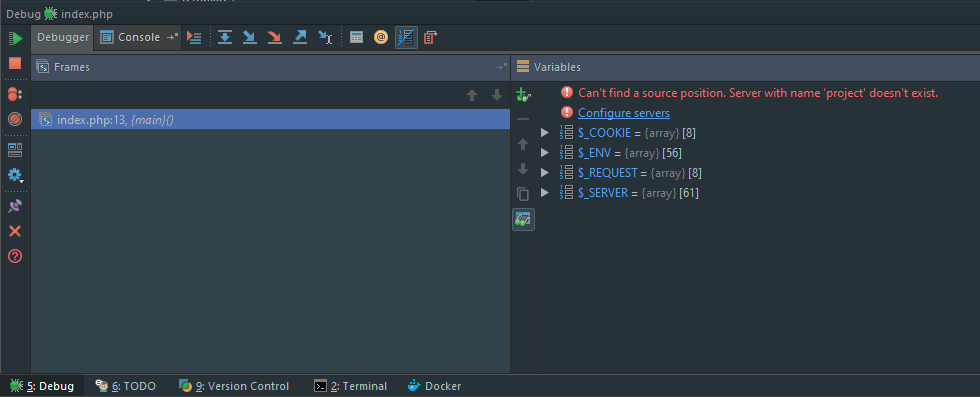

Once we’ve configured XDebug, we need to instruct PHPStorm to listen for all incoming connections. We do this by clicking the `Run > Start Listening For PHP Debug Connections` menu. The first time we trigger an action that runs Magento code, a dialog similar to the one below will appear:

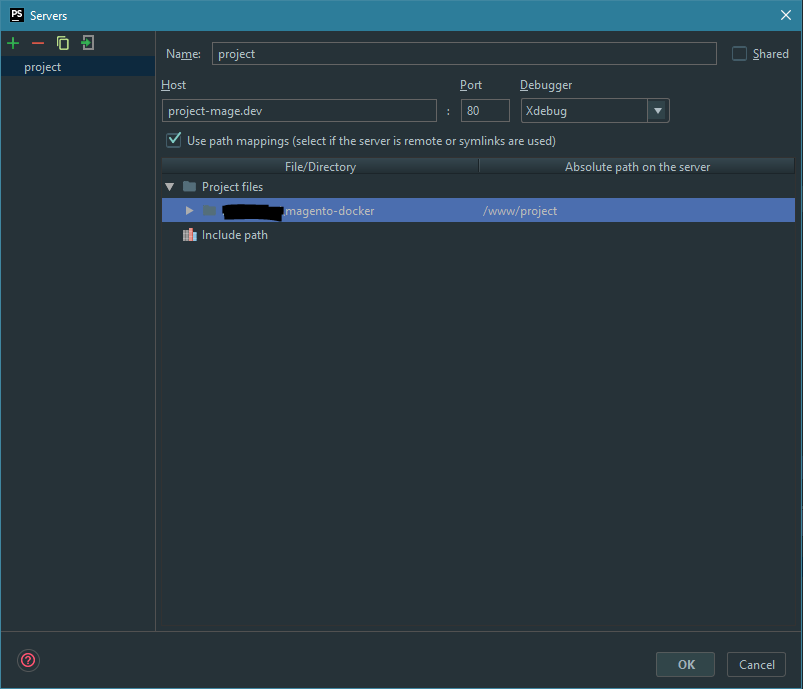

After selecting the corresponding server configuration, or creating a new one if needed like in the image below, we can proceed with the debugging process.

To investigate any issues with the XDebug connection, we can enable the XDebug log by uncommenting the `xdebug.remote_log` line in the `.docker/php/conf/xdebug.ini` file. Do not forget to restart the PHP container afterwards by running `docker restart container_id`, replacing `container_id` with the ID of the container on your machine.
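
Since editing the file and restarting the container is enough to apply changes, the configuration is presumably mounted into the container rather than copied at build time. A sketch of such a mount, assuming an image based on the official PHP image (which scans `/usr/local/etc/php/conf.d` for additional `.ini` files) and a hypothetical log path `/var/log/php`:

```yaml
# Hypothetical sketch; both container-side paths are assumptions.
version: "2"

services:
  php:
    build: ./.docker/php
    volumes:
      # XDebug settings, picked up from PHP's conf.d scan directory
      - ./.docker/php/conf/xdebug.ini:/usr/local/etc/php/conf.d/xdebug.ini
      # Log folder; xdebug.remote_log would need to point to a file inside it
      - ./.docker/php/logs:/var/log/php
```

Mounting the log folder this way would make the XDebug log readable from the host without entering the container.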

Different environments, scaling and an always evolving project

As time progresses, projects evolve, and requirements change. We may start with only three Docker services for our Magento application, but, as the shop gets an increasing number of visitors, we may require adding some caching capabilities. This means that the development environment will have to change. By using Docker, this change will only require adding a new service definition in the `docker-compose.yml` file for the new caching service. The new service will then be created automatically on all the environments where the new configuration is deployed.
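
As an illustration, suppose the caching layer is Redis. The choice of Redis, the service name `cache` and the image tag are assumptions made for this sketch. The addition to `docker-compose.yml` could be as small as:

```yaml
# Hypothetical sketch of adding a caching service.
version: "2"

services:
  # ... existing nginx, php and database services stay unchanged ...

  cache:
    image: redis:alpine           # assumed caching backend
```

Other containers in the same Compose project can then reach it by its service name, `cache`, on the default Redis port 6379. Magento itself still has to be configured to use it.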

How about adding additional web servers or clustering the database? For once, it’s as easy as it sounds. We just add the new services to the Docker configuration file, `docker-compose.yml`, and, if needed, create a `Dockerfile` for each new service. We then update each system’s configuration as needed, configuring the database cluster or the web server proxy.

The last step now is to distribute the new or updated files to the other team members and Docker will handle it from there.

Different teams will have different needs. For example, the front-end team will require an additional set of tools to complete their work. With Docker we can easily handle different environment needs by using a `docker-compose.override.yml` file. Here, we can define the additional containers needed to build the front-end part of our Magento 2 shop. This feature’s advantage is that it creates containers exclusively for the front-end team, without the back-end or testing teams being aware of them. Therefore, we can maintain a clean environment for each team and avoid allocating resources to tools that are unnecessary.
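
Compose reads `docker-compose.override.yml` automatically when it sits next to `docker-compose.yml` and merges the two. A minimal sketch of a front-end override, in which the service name `frontend`, the Node.js image and the command are hypothetical placeholders:

```yaml
# docker-compose.override.yml (hypothetical, kept only on front-end machines)
version: "2"                      # must match the version used in docker-compose.yml

services:
  frontend:
    image: node:lts               # assumed toolchain image
    working_dir: /app
    volumes:
      - .:/app                    # project sources
    command: npm install          # placeholder; replace with the team's build or watch task
```

Because the file is picked up implicitly, the front-end team keeps running the same `docker-compose up -d` command as everyone else.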

Final notes

Docker is quickly gaining ground in the virtualization field due to its impressive list of benefits, a few of which we’ve seen in this article:

- same exact environment across the entire development team, including new members;

- quick ramp-up of new team members due to the already built infrastructure;

- easy spawning and removal of environments;

- environment standardization and implementation of version control.

A few other noteworthy benefits that Docker provides are the simplified configuration system for building template images, a gentler learning curve, application isolation and speed improvements over traditional virtual machines.

While we can go on and on listing the benefits of Docker, we can agree that working experience leads to faster learning. With this in mind, head on over to the Evozon Github repository, download the bootstrap project for Magento 2 and let us know what you think in the comments section below.

PS: pull requests for fixes and new functionalities are always welcome.

[1]: A service, in Docker terms, is any one application or system that can perform a task. This means that a service can be any application, like Nginx or PHP, or an entire system composed of multiple applications running as a single application – e.g. Gitlab is a single system composed of multiple smaller applications.

Article written by Denis Rendler